1. Introduction

Have you ever wanted to know if a current or potential customer opened a new office? Imagine how you could leverage that information to sell them more of your offerings.

Companies signal many of these activities, as well as their current situation, in job postings: from the tools they use and the teams they hire for to the new offices they open.

In this article, we provide a hands-on guide to extracting company signals from job postings, specifically new offices, locations, manufacturing facilities, or building sites.

Utilizing Techmap's free data feed, we will demonstrate the process of extracting and analyzing these signals to provide actionable insights for your business.

Outline of the Article

- Introduction

- Understanding Company Signals

- Extracting Data from Job Postings

- Identifying New Locations or Offices

- Practical Steps to Implement Extraction

- Conclusion

2. Understanding Company Signals

Company signals are indicators that provide insights into a company's activities, strategies, and future plans. They help identify sales opportunities, understand market trends, and develop targeted sales strategies. These signals can be derived from various sources, including job postings, press releases, financial reports, social media activity, and more.

Typical company signals contained in job postings include:

- Product-related: launch signals, strategy signals, collaboration signals, supply chain signals.

- Work-related: tool signals, tech signals, process signals.

- Financial-related: investment signals, revenue signals, salary signals.

- Growth-related: location signals, hiring signals, workforce signals, business signals, organizational signals.

Company signals offer relevant and important insights into the inner workings of companies and can support:

- Tailored Outreach: Identifying companies sending specific signals allows sales teams to tailor their pitches to meet specific needs and seize timely opportunities.

- Prioritized Outreach: Focusing resources on high-potential leads based on company signals ensures efficient use of time and effort.

- Competitive Advantage: Understanding competitor movements and market trends enables companies to adjust strategies proactively.

In the remainder of this article, we focus on location signals mentioning new offices, manufacturing facilities, or building sites that can signal geographic expansion or market entry. For example, a company opening and staffing a new office in a previously unserved country can indicate expansion plans and the need for products and services.

3. Extracting Data from Job Postings

3.1. Understanding Job Postings

Job postings typically target potential new employees. They create awareness that a company exists and is hiring for a specific role, and they provide insights into what the company does, plans, wants, uses, builds, etc. Their content mostly contains information such as:

- Company Information: Industry, company size, location(s), goals, products, achievements, vision, mission, values, and culture.

- Job Information: Employee’s tasks, role, responsibilities, objectives, expected start time, work environment, and workplace.

- Requirement Information: Experience, industry background, skills, competencies, qualifications, certifications, working hours, work location, and travel requirements.

- Compensation Information: Salary range, bonus structure, benefits, perks, and incentives.

- Application Information: Required application documents, application deadline, contact information such as phone numbers, email addresses, or contact forms.

Job postings can also contain information on the departments or teams the employee will join or work with, career paths, opportunities for advancement, personal budgets for hardware or conferences, and social activities of the team or company.

In the remainder of this article, we focus on location information from the company description and job overview to identify location signals indicating new offices, locations, manufacturing facilities, or building sites.

3.2. Origin of Job Postings

Companies distribute their job postings on various platforms, such as their own website, career pages of their HR tool (e.g., Personio or SAP SuccessFactors), job boards (e.g., Monster or StepStone), job aggregators (e.g., Indeed or CareerJet), job meta-search engines (e.g., Trovit or JobRapido), or social media platforms (e.g., LinkedIn or Facebook).

Our job posting data is sourced from many of these platforms, cleaned, normalized, and stored in data files in AWS S3, which we offer on the AWS Data Exchange (ADX) platform. ADX is a service that allows customers to securely find, subscribe to, and use third-party data in the cloud. It enables businesses to leverage a wide variety of datasets for analytics, machine learning, and other data-driven applications.

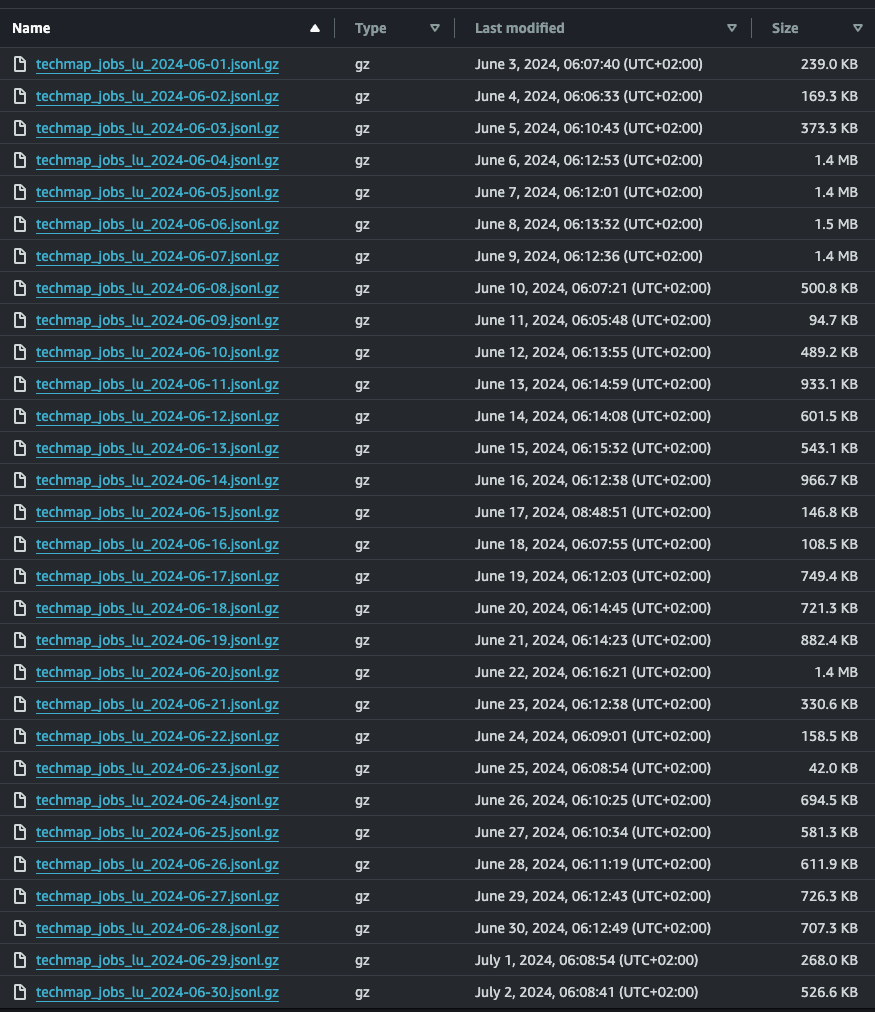

In the remainder of this paper, we will use the free Luxembourg data feed from Techmap on ADX, which gives access to all our historical data since January 2020. Specifically, we use the data from June 2024 with 14.7k job postings, with the main sources being LinkedIn, CareerJet, Indeed, Eures, and SmartRecruiters. For Luxembourg, the compressed data files typically range from 100KB to 1.5MB per day as shown in figure 1.

Figure 1: List of Techmap’s Luxembourg job posting data files in AWS S3

3.3. Data in Job Postings

Our data is exported daily in JSON Lines format and stored in gzip-compressed files: in the uncompressed files, each line contains one job posting as a JSON object. Table 1 lists its primary fields:

Table 1: Data Dictionary for Techmap’s job postings (primary fields)

| Field name | Data Type | Description | Example |
|---|---|---|---|
| name | String | Title of the job | Cyber Security Project Manager |
| url | String | Link to the job posting (often only valid for a month after dateCreated) | https://lu.linkedin.com/jobs/view/cyber-security-project-manager-at-wds-global-limited-3949517399 |
| dateScraped | Date | Day and time we found the job posting | 2024-06-15T18:57:58+0000 |
| dateCreated | Date | Day and time the job was published | 2024-06-15T10:28:56+0000 |
| location | JSON | Information on the location of the job | {"orgAddress": { "addressLine": "Luxembourg, Luxembourg, Luxembourg", "countryCode": "lu", "country": "Luxembourg", "state": "Luxembourg", "city": "Luxembourg", "geoPoint": {"lat": 49.61, "lng": 6.129627 } } } |
| company | JSON | Information on the company as found on the job posting’s page | {... "name": "State Street", "source": "linkedin_lu", "idInSource": "state-street", "urls": {"linkedin_lu": "https://www.linkedin.com/company/state-street", …}, … } |
| text | String | Plain-text version of the job description | ... We are looking for a skilled and experienced Cybersecurity Project Manager to lead customer’s cybersecurity initiatives ( location : Luxembourg). The ideal candidate will be a strategic thinker with a robust technical background , exceptional … |
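To get a feel for these fields before writing any extraction rules, you can print the primary fields of the first few postings directly from a compressed daily file. The following is a minimal sketch that assumes Bash, gzip, and jq are installed and that the records follow Table 1. The function name `peek_postings` is a hypothetical helper of ours and is not part of Techmap's tooling.

```bash
#!/bin/bash
# Hypothetical helper (not part of Techmap's tooling): print the primary fields
# of the first N postings in a gzip-compressed JSON Lines file as compact JSON.
# Usage: peek_postings FILE.jsonl.gz [N]   (N defaults to 1)
peek_postings() {
  local file="$1" count="${2:-1}"
  # Decompress to stdout, keep the first N lines (= N postings), reduce each record
  gzip -dc "$file" | head -n "$count" | jq -c '{
      name,
      url,
      dateScraped,
      dateCreated,
      company: .company.name,
      city: .location.orgAddress.city
    }'
}

# Example: peek_postings techmap_jobs_lu_2024-06-01.jsonl.gz 3
```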

4. Identifying New Locations or Offices

Now that we know how the job posting data is structured, we need to prepare the extraction algorithm and define what exactly we want to extract from this data.

In the following, we use shell commands to download and process the data files, and regular expressions (regex) in Java syntax to define the identification rules. If you’re using other programming languages such as Python or JavaScript, you might need to adapt them slightly.

Because Luxembourg has multiple official languages, its job postings are multilingual, so we identify location signals in English, French, and German. In our June 2024 dataset, 49% of the postings were in English, 43% in French, 6% in German, and the rest in other languages. Furthermore, the regular expressions cover synonyms and translations used by the hiring companies, such as “premise” or “bureau” instead of “office”. Table 2 shows the regular expressions we used in the analysis below:

Table 2: Location signals with synonyms for "office"

| Type | Language | Regex | Examples |
|---|---|---|---|
| New Office | English | `(new (\w+ ){0,2}(office\|premise\|location\|facilit(y\|ie)\|site)s?)` | “opening a new office in New York”, “new London location”, … |
| New Office | French | `(nouveau (\w+ ){0,2}(bureau\|locaux\|facilité\|site)s?)` | “nouveau bureau à Strasbourg”, “nouveau Lyon site”, … |
| New Office | German | `(neue(n\|m\|s)? (\w+ ){0,2}(Büro\|Office\|Räum(en\|lichkeiten)\|Standorte?\|Einrichtung(en)?))` | “im neuen Büro”, “ein neues Office”, “in unseren neuen Räumlichkeiten”, … |
| We Expand | English | `(we (are )?(expand(ing)?) to)` | “we expand to”, “we are expanding to” |
| We Expand | French | `(nous (\w+ )?(étendons\|développons?) (à\|en))` | “Nous nous étendons à Allemagne”, “Nous nous développons en Allemagne” |
| We Expand | German | `(wir (\w+ )?(expandieren) nach)` | “Wir expandieren nach Frankreich”, … |

To use the rules easily in source code, we combine the individual regular expressions into the following complete regex. It is split across several lines for readability; join the lines without adding whitespace before using it. The leading (?i) flag makes Java ignore letter case. In JavaScript, drop (?i) and use the i flag instead (/…/i). In Python, pass re.IGNORECASE (Python also accepts a leading (?i)):

```
(?i)\b(
(new (\w+ ){0,2}(office|premise|location|facilit(y|ie)|site)s?)
|(nouveau (\w+ ){0,2}(bureau|locaux|facilité|site)s?)
|(neue(n|m|s)? (\w+ ){0,2}(Büro|Office|Räum(en|lichkeiten)|Standorte?|Einrichtung(en)?))
|(we (are )?(expand(ing)?) to)|(nous (\w+ )?(étendons|développons?) (à|en))
|(wir (\w+ )?(expandieren) nach)
)\b
```
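If you maintain the per-language rules of Table 2 separately, you can assemble the combined regex programmatically instead of editing one long line by hand. The following is a minimal Bash sketch. The patterns are copied from the combined regex, and the names `LOCATION_PATTERNS` and `build_regex` are hypothetical. It produces the same single-line regex, without (?i), that the extraction script in Section 5.2 uses. For Java, prepend (?i) to the result.

```bash
#!/bin/bash
# One alternative per rule and language (same patterns as the combined regex above)
LOCATION_PATTERNS=(
  '(new (\w+ ){0,2}(office|premise|location|facilit(y|ie)|site)s?)'
  '(nouveau (\w+ ){0,2}(bureau|locaux|facilité|site)s?)'
  '(neue(n|m|s)? (\w+ ){0,2}(Büro|Office|Räum(en|lichkeiten)|Standorte?|Einrichtung(en)?))'
  '(we (are )?(expand(ing)?) to)'
  '(nous (\w+ )?(étendons|développons?) (à|en))'
  '(wir (\w+ )?(expandieren) nach)'
)

# Hypothetical helper: join all arguments with "|" and wrap them in word boundaries
build_regex() {
  local IFS='|'                 # "$*" joins the arguments with the first character of IFS
  printf '\\b(%s)\\b' "$*"      # "\\b" in the format string prints a literal "\b"
}

REGEX=$(build_regex "${LOCATION_PATTERNS[@]}")
echo "$REGEX"
```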

Finally, to test the regex, we can paste it into the online tool Regex101 (using its Java 8 flavor) together with the following text:

```
We are opening a new office in New York
Join our team at our new Los Angeles office
We are expanding to Canada and are looking for new talent
We are hiring for our new London location
Wir eröffnen ein neues Büro in Paris
Werden Sie Teil unseres Teams in unserem neuen Baden Badener Büro
Wir expandieren nach Frankreich und suchen neue Talente
Wir stellen für unser neues Berliner Büro ein
Nous ouvrons un nouveau bureau à Strasbourg
Rejoignez notre équipe dans notre nouveau Paris bureau
Nous nous étendons à Allemagne et recherchons de nouveaux talents
Nous recrutons pour notre nouveau Lyon site
```
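If you prefer to test offline instead of using Regex101, GNU grep can apply the same combined regex to the sample text. The following is a minimal sketch that assumes two things: GNU grep, which supports `\b` and `\w` in extended regular expressions as an extension, and a UTF-8 locale, so that accented characters such as "à" count as word characters. `find_signals` and `sample_text.txt` are hypothetical names.

```bash
#!/bin/bash
# Combined regex from above, without (?i); case-insensitivity comes from grep -i
REGEX='\b((new (\w+ ){0,2}(office|premise|location|facilit(y|ie)|site)s?)|(nouveau (\w+ ){0,2}(bureau|locaux|facilité|site)s?)|(neue(n|m|s)? (\w+ ){0,2}(Büro|Office|Räum(en|lichkeiten)|Standorte?|Einrichtung(en)?))|(we (are )?(expand(ing)?) to)|(nous (\w+ )?(étendons|développons?) (à|en))|(wir (\w+ )?(expandieren) nach))\b'

# Hypothetical helper: print every matched phrase read from stdin, one per line
#   -E  extended regular expressions
#   -i  ignore case (like the (?i) flag)
#   -o  print only the matching part of each line
find_signals() {
  grep -Eio "$REGEX"
}

# Usage: save the sample sentences to sample_text.txt, then run
#   find_signals < sample_text.txt
```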

5. Practical Steps to Implement Extraction

With the regular expressions prepared, we can now apply them to the job postings. We first download the data files from AWS S3 and decompress them. In our analysis, we used a combination of Unix shell commands: the jq tool to parse the JSON data and apply our regular expression, and grep, sort, and uniq to summarize the results.

First, check that you’ve installed the command-line tools aws (AWS CLI), gzip, grep, sort, uniq, and jq. If necessary, install them using apt (e.g., on Ubuntu), yum (e.g., on Amazon Linux), or brew (e.g., on macOS). If you do not have an AWS account, you must create one, e.g., by following the tutorial “Setting up AWS Data Exchange”.

To access our job postings, follow AWS’s tutorial on subscribing to AWS Data Exchange for Amazon S3, but subscribe to our free Luxembourg data feed on ADX instead of the tutorial’s test product.

5.1. Downloading the job postings

After subscribing, you will receive from AWS an access point alias to our S3 data bucket, which contains the job postings from Luxembourg under the prefix lu/. The access point alias ends with -s3alias; below, we use <YOUR_BUCKET_ALIAS> as a placeholder. To download the compressed data files to your computer, use the following commands with your individual bucket alias.

```bash
# List all files from June 2024
aws s3api list-objects-v2 \
  --request-payer requester \
  --bucket <YOUR_BUCKET_ALIAS> \
  --prefix 'lu/techmap_jobs_lu_2024-06-' | grep Key

# Download one individual file
aws s3 cp \
  --request-payer requester \
  s3://<YOUR_BUCKET_ALIAS>/lu/techmap_jobs_lu_2024-06-01.jsonl.gz .

# Download all files from June 2024 (requires 19 MB)
aws s3 sync \
  s3://<YOUR_BUCKET_ALIAS>/lu/ . \
  --request-payer requester \
  --exclude "*" \
  --include "techmap_jobs_lu_2024-06-*.jsonl.gz"

# Decompress all files from June 2024 (results in 130 MB)
gzip -d *.gz
```
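Because every line of a decompressed file holds exactly one job posting, counting lines is a quick way to check the download against the figures above (for example, the 14.7k postings for June 2024). The following is a minimal sketch. `count_postings` is a hypothetical helper, and it assumes every file ends with a newline, because `wc -l` counts newline characters.

```bash
#!/bin/bash
# Hypothetical helper: print "<file><TAB><postings>" per file and a final TOTAL line.
# One line = one posting in JSON Lines; wc -l counts newline characters, so a file
# without a trailing newline would be undercounted by one.
count_postings() {
  local file n total=0
  for file in "$@"; do
    [[ -e "$file" ]] || continue      # skip unmatched glob patterns
    n=$(wc -l < "$file")
    n=${n//[[:space:]]/}              # some wc implementations pad the number
    printf '%s\t%s\n' "$file" "$n"
    total=$((total + n))
  done
  printf 'TOTAL\t%s\n' "$total"
}

# Usage: count_postings techmap_jobs_lu_2024-06-*.jsonl
```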

5.2. Filtering the job postings

Now that we have the job postings as text files in JSON Lines format, we can program the identification of location signals. We loop over the files, test each posting against our regular expression, and append a small JSON object to an output file for each matching posting. This object contains the job title, URL, company name, location, and the matching snippets (i.e., keywords in context), so that we can manually verify whether a match is a true or false positive.

The following Bash script implements this loop for all decompressed files from June 2024:

```bash
#!/bin/bash

# Define the combined regex as an environment variable
export REGEX='\b((new (\w+ ){0,2}(office|premise|location|facilit(y|ie)|site)s?)|(nouveau (\w+ ){0,2}(bureau|locaux|facilité|site)s?)|(neue(n|m|s)? (\w+ ){0,2}(Büro|Office|Räum(en|lichkeiten)|Standorte?|Einrichtung(en)?))|(we (are )?(expand(ing)?) to)|(nous (\w+ )?(étendons|développons?) (à|en))|(wir (\w+ )?(expandieren) nach))\b'

# Define and clear the output file for the results
export OUTPUT_FILE="location_signals.txt"
printf '' > "$OUTPUT_FILE"

# Loop over all decompressed files matching the pattern
for file in techmap_jobs_lu_2024-06-*.jsonl; do
  # Check if the file exists to avoid errors if no files match the pattern
  if [[ -e "$file" ]]; then
    # Keep each posting once if any top-level string field matches the regex
    # case-insensitively ("i" flag), then extract the relevant fields and
    # up to 20 characters of context around each match
    jq -r --arg regex ".{0,20}$REGEX.{0,20}" '
      select(any(.[] | strings; test($regex; "i")))
      | {
          job_name: .name,
          job_url: .url,
          company_name: .company.name,
          location: (.location.orgAddress.addressLine // .location.orgAddress.city),
          matched_text: [ .[] | strings | match($regex; "i").string ]
            | unique | map("..." + . + "...") | join(", ")
        }
    ' "$file" >> "$OUTPUT_FILE"
  else
    echo "No files matching the pattern found."
  fi
done
```

Running this script took only 56 seconds to analyze the roughly 14.7k job postings from Luxembourg, spread across 30 decompressed files. The resulting output file contains a reduced record for each job posting in which a location signal was identified. For example, the output looks like this:

```
…
{
  "job_name": "Cross-Border Fund Services - Analyst",
  "job_url": "https://lu.linkedin.com/jobs/view/cross-border-fund-services-analyst-at-deloitte-3941472766",
  "company_name": "Deloitte",
  "location": "Luxembourg, Luxembourg, Luxembourg",
  "matched_text": "...over our remarkable new premises, located in Cloche ..."
}
…
{
  "job_name": "Trade Finance Professional",
  "job_url": "https://lu.linkedin.com/jobs/view/trade-finance-professional-at-euro-exim-bank-3941632477",
  "company_name": "Euro Exim Bank",
  "location": "Luxembourg",
  "matched_text": "...on and are planning new offices in major financial ..."
}
…
```
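For a quick cross-check without jq or decompressed files, you can count the postings (i.e., lines) that contain at least one match directly in the compressed files. This is a rough sketch that assumes GNU grep, a UTF-8 locale, and accented characters stored unescaped in the JSON. If they appear as \u escapes, the French and German rules would not match. Because grep scans the raw JSON line, including field names and URLs, rather than individual string fields, its count can differ slightly from that of the jq-based script. `count_candidate_postings` is a hypothetical name.

```bash
#!/bin/bash
# Same combined regex as in the extraction script above
REGEX='\b((new (\w+ ){0,2}(office|premise|location|facilit(y|ie)|site)s?)|(nouveau (\w+ ){0,2}(bureau|locaux|facilité|site)s?)|(neue(n|m|s)? (\w+ ){0,2}(Büro|Office|Räum(en|lichkeiten)|Standorte?|Einrichtung(en)?))|(we (are )?(expand(ing)?) to)|(nous (\w+ )?(étendons|développons?) (à|en))|(wir (\w+ )?(expandieren) nach))\b'

# Hypothetical helper: count lines (= postings) with at least one case-insensitive
# match, reading the gzip-compressed daily files directly
count_candidate_postings() {
  gzip -dc "$@" | grep -Eic "$REGEX"
}

# Usage: count_candidate_postings techmap_jobs_lu_2024-06-*.jsonl.gz
```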

To list each company only once, regardless of how many postings it placed, use the following command:

```bash
grep company_name location_signals.txt | sort | uniq
```

The result looks like this:

```
"company_name": "Allianz Global Investors",
"company_name": "Amazon EU Sarl - A84",
"company_name": "Amazon EU Sarl",
"company_name": "Amazon",
"company_name": "ArcelorMittal",
"company_name": "Astel Medica",
"company_name": "Deloitte",
"company_name": "Euro Exim Bank",
"company_name": "Koch Global Services",
"company_name": "Koch Industries",
"company_name": "Luxscan Weinig Group",
"company_name": "MD Skin Solutions",
"company_name": "ROTAREX",
"company_name": "Thales",
"company_name": "e-Consulting RH, Sourcing & Recrutement de Profils pénuriques",
"company_name": "myGwork - LGBTQ+ Business Community",
```

Now, we can match the results with our own company data, such as customer or competitor information, and begin handling these new signals effectively. This process allows for more targeted and strategic decision-making, enhancing your competitive edge.
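To prioritize these companies, it can help to know how many postings with location signals each company placed, rather than only deduplicating the names. The following is a minimal sketch that assumes jq's pretty-printed output as shown above and company names without escaped quotes. `rank_companies` is a hypothetical helper.

```bash
#!/bin/bash
# Hypothetical helper: rank companies by the number of postings with a location signal.
# Expects jq's pretty-printed output, where each company appears on a line such as
#   "company_name": "Deloitte",
rank_companies() {
  grep '"company_name"' "$1" \
    | sed -E 's/^[[:space:]]*"company_name": "(.*)",?$/\1/' \
    | sort | uniq -c | sort -rn
}

# Usage: rank_companies location_signals.txt
```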

Depending on your internal processes, you may choose to store the data in a database rather than in JSON or CSV format for more efficient post-analysis activities. Alternatively, you can send the information directly to your sales department via email, ensuring timely and actionable insights.
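If you prefer CSV, for example to import the signals into a spreadsheet or a database, jq can convert the output file directly, because it reads the stream of pretty-printed objects as-is. The following is a minimal sketch using jq's built-in `@csv` formatter. `export_csv` and the column order are our own hypothetical choices.

```bash
#!/bin/bash
# Hypothetical helper: convert the stream of JSON objects in location_signals.txt
# into CSV with a header row. @csv wraps strings in double quotes and escapes
# embedded quotes by doubling them.
export_csv() {
  local input="$1" output="$2"
  {
    echo 'company_name,location,job_name,job_url,matched_text'
    jq -r '[.company_name, .location, .job_name, .job_url, .matched_text] | @csv' "$input"
  } > "$output"
}

# Usage: export_csv location_signals.txt location_signals.csv
```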

5.3. Analyzing the Results

In summary, in under a minute we identified 144 job postings in Luxembourg that mentioned new offices in June 2024. After deduplicating the company names, we pinpointed 16 unique companies with location signals.

As a result, we extracted one company-specific location signal per 918 job postings in Luxembourg. This analysis indicates that, in June 2024, 12 countries could provide at least 100 location signals per month and 43 countries more than 10.

6. Conclusion

In this article, we demonstrated how to extract and analyze location signals from job postings using Techmap’s free data feed. By leveraging these signals, companies can gain valuable insights into their competitors’ activities, identify sales opportunities, and develop targeted sales strategies.

Location signals are just one example of how job postings can be used to gather competitive intelligence. By applying similar techniques, companies can extract and analyze a wide range of signals to gain a comprehensive understanding of their market environment.

In our Luxembourg example, we identified 144 job postings with location signals from 16 unique companies in June 2024. Our additional analyses of other months and countries, such as the USA, show a similar ratio. For June 2024, for example, we found 1,241 signals among 918k job postings in the USA.
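For reference, the ratios compared here are the number of job postings divided by the number of signals, using the rounded figures given in this article:

$$
\frac{14{,}700}{16} = 918.75 \;\; \text{(Luxembourg)}, \qquad \frac{918{,}000}{1{,}241} \approx 739.7 \;\; \text{(USA)}
$$

Both correspond to roughly one signal per 700 to 900 job postings.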

Leveraging machine learning and AI can further enhance this process by automating the extraction, increasing precision, and uncovering deeper insights. By integrating advanced analytics, businesses can identify even higher-quality leads more efficiently, driving more targeted and effective outreach strategies.

If you are interested in further exploring the potential of job postings for competitive intelligence, we encourage you to experiment with our data feed and source code provided in this article. Happy analyzing!